“How to Create a GPR” Part 3: Industrial Design

In the early 1900s, imagining a world with billions of cars would have been hard. Today we take it for granted. But there was a world before cars. Now it’s hard to imagine a world without them.

Elon Musk and Vinod Khosla both claim that we will someday have billions of general-purpose robots working with and among us. This is also hard to imagine. But it may be necessary.

As birth rates plummet, and populations decline and age, the work that needs doing will increasingly have fewer people to do it. There won’t be enough of us to do the things that keep civilization working. We will need machines to do everything that needs to be done.

Billions of general-purpose robots doing all the things will change the world in a more profound way than cars did. We are at the stage now when early versions of these machines are being designed and put to work. There are many organizations looking to build this future with differing perspectives on what is important to do and in what order.

This post is about how we thought about the design of Phoenix, Sanctuary’s most recent general-purpose robot.

What is a General-Purpose Robot?

At Sanctuary, general-purpose means a robot physically capable of doing most of the world’s work. A general-purpose robot must be able to be anything from an accountant to a zoologist. We don’t want to make design choices that limit what work these robots can physically do.

This requirement places stringent constraints on the physical form of the machine. The ideal physical form for doing the sort of work we want and need done is human-like. Our world is, and always will be, designed for people. The objects and environments in our world are designed to be lived in and acted upon by us. A machine that is not human-like will not be able to do many of the tasks we care about.

Damion Shelton, CEO of Agility, puts it this way: "I have a Roomba, but, it doesn’t matter how good your L.L.M. is, there is no piece of code that is going to result in it driving to my front hall and putting my HelloFresh box in the recycling bin." (L.L.M. stands for large language model; OpenAI’s GPT-4 is one example.) The point is that Roombas are not physically capable of performing that task, or anything other than the single specific thing they are designed for, and no software can change that.

What Do People Physically Do When We Work?

The US Department of Labor has answered this question, in the form of the O*NET system. O*NET characterizes all the things that are necessary, physically and cognitively, to deliver work across more than 1,000 occupations in the US economy.

This system contains data sufficient to rank the physical and cognitive attributes people bring to work in order of importance. Here we’ll just deal with the physical side, as the cognitive side is a software thing, and in this post, we’re just focusing on robot hardware and design.
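As a rough illustration of what ranking attributes by importance can look like, here is a minimal Python sketch. The rows, the 1-5 importance scale, the attribute names, and the function name `rank_attributes` are all made up for this example. This is not O*NET's actual schema, data, or method.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical (occupation, attribute, importance) ratings on a 1-5 scale.
# These rows are invented for illustration; they are NOT O*NET data.
RATINGS = [
    ("accountant", "near vision", 4.0),
    ("accountant", "finger dexterity", 3.0),
    ("accountant", "gross body mobility", 1.0),
    ("electrician", "near vision", 4.0),
    ("electrician", "finger dexterity", 4.5),
    ("electrician", "gross body mobility", 3.5),
    ("zoologist", "near vision", 4.5),
    ("zoologist", "finger dexterity", 3.5),
    ("zoologist", "gross body mobility", 3.0),
]


def rank_attributes(ratings):
    """Sort attributes by mean importance across occupations, highest first."""
    by_attribute = defaultdict(list)
    for _occupation, attribute, importance in ratings:
        by_attribute[attribute].append(importance)
    means = {attr: mean(values) for attr, values in by_attribute.items()}
    return sorted(means.items(), key=lambda item: item[1], reverse=True)


ranking = rank_attributes(RATINGS)
for attribute, score in ranking:
    print(f"{attribute}: {score:.2f}")
```

The ordering this prints is a consequence of the invented numbers, not evidence for any particular ranking. The sketch only shows the mechanics of averaging importance across occupations and sorting.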

The top three most important physical requirements to do work according to O*NET, in order of importance, are:

Human-like senses. The robot must be able to sense the world the same way we do.

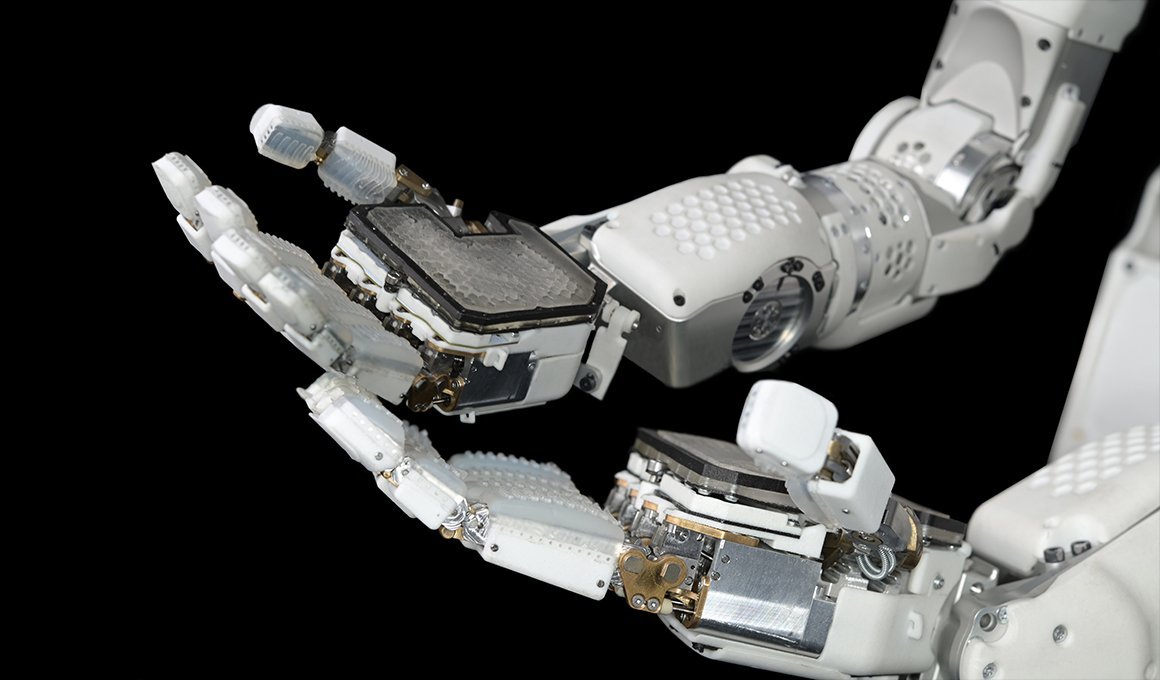

Highly capable hands. Dexterous in-hand manipulation of things is required for more than 98% of all occupations.

Mobility. Being able to move around from place to place (flat floors, steps, stairs, ladders), get in and out of things (cars, seats, rooms), and the like.

Doing work requires our robot to be able to sense the world around it the same way we do. While there are jobs that require smell and taste, most work can be done with a robot with just four human-like senses: vision, hearing, proprioception (knowledge of where its body is in the world), and touch. Touch is a very important sense, on par with vision, because the human-like use of hands requires touch.

Previous posts of the series have already… touched… on the significance of hands, but it’s worth reiterating here. While there are many economically important tasks that do not require the capabilities of the human hand, these number less than 2% of all tasks. Can you build a vibrant business building robots that only address these 2%? Yes, of course — 2% of the global economy is a very big number! But that’s not our goal here. We want to think about what general-purpose robots should look like. It is clear that in order to do nearly any job, you need robotic hands that are functionally very similar to the human hand.

While it is possible to build grippers of increasing capability, say with three or four fingers, or lacking the shape of the human hand, these types of design decisions dramatically degrade generality. To be truly general for work, the hand must be a mimic of the human hand, with four fingers and an opposable thumb, with 20+ degrees of freedom per hand. Nearly everything in the world of work, from doorknobs to keyboards to consumer products, is built to conform to the specific details of how our hands work.

In addition to the need for hands, work requires that the hands be able to do a variety of tasks in environments that are designed for people. A significant number of the occupations that require hands also require two-handed manipulation of objects and environments.

What about mobility? Can we get away with tethers for power and network? Can the system be on a wheeled base? The answer to both of these questions is no. It is perhaps less obvious than the need for hands, but two-legged (sometimes called bipedal) mobility is required for many different kinds of work. Wheels are fine for certain tasks but limit generality. Tethers significantly reduce what a robot can do.

About 65% of O*NET occupations require mobility, and roughly 20% of them require two legs. This is not nearly as lopsided as was the case with hands. Most of the world’s work can be done by robots with good hands and poor mobility. But robots with poor (or no) hands and good mobility can do very little useful work. Hands are much more important than mobility when it comes to general-purpose work.
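To make the comparison concrete, here is a small back-of-the-envelope calculation in Python using the approximate figures quoted in this post. It assumes that "20% of them" means 20% of the occupations that require mobility. That reading is an assumption, and the variable names are just for illustration.

```python
# Approximate figures quoted in this post.
hands_share = 0.98               # "more than 98%" of occupations need dexterous hands
mobility_share = 0.65            # "about 65%" of occupations need mobility
two_legs_given_mobility = 0.20   # ASSUMPTION: 20% of the mobility-requiring occupations

# Share of all occupations that need two legs, under the assumption above.
two_legs_share = mobility_share * two_legs_given_mobility
# Occupations that need mobility but not specifically two legs.
other_mobility_share = mobility_share - two_legs_share
# Occupations that need no mobility at all.
no_mobility_share = 1 - mobility_share

print(f"need dexterous hands:       {hands_share:.0%}")
print(f"need two legs:              {two_legs_share:.0%}")
print(f"need mobility, not two legs: {other_mobility_share:.0%}")
print(f"need no mobility:           {no_mobility_share:.0%}")
```

Under that reading, only a small fraction of all occupations strictly needs two legs, while nearly all of them need dexterous hands, which is the asymmetry described above.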

Industrial Design

Given these constraints, there is still an enormous design space to work within. We could design dozens of different robots that met all these requirements and looked very different.

Phoenix is the sixth major design we’ve done on the basic humanoid form factor. Of the previous designs, we liked the look of the immediately previous one best (the fifth generation, which was, until recently, our workhorse). We spent a lot of time thinking about why, and came up with the following explanation. While there are many types of reactions people can have to a robot, there were two we felt were most important, and they tend to pull in opposite directions. These two ‘dimensions’ are (a) is the robot endearing, or said a different way, do I like being around the robot, feel a connection to it, and want it to do well and succeed, and (b) does the robot look competent, or does it look like it can do serious work.

In science fiction, robots tend to be one or the other, but rarely both. Go through all the sci-fi robots you can think of, and observe they tend to fall into one of those two categories (there are some exceptions). This also tends to be true in reality. Few people find industrial manufacturing robots endearing, but they certainly look (and are) competent. The worst case, which unfortunately many humanoid robots, including some of our previous iterations, fall into, is when a robot looks neither endearing nor competent.

In the industrial design of Phoenix, we wanted to maximize these two qualities. But in some sense that type of design is a veneer. Not only does the robot need to look competent, it also has to be competent. It needs to be able to deliver on the promise, and be able to actually do real work. Also, the design needs to be manufacturable with the technologies available to us today. Many science fiction robots, or robots visualized solely using computer graphics (this latter category is unfortunately a big part of the marketing of some other humanoid companies), could not be built in real life, at least with technologies available today.

To iterate towards a design that was manufacturable, endearing, looked competent, and delivered on the promise by actually being highly physically capable, we felt that the most important starting points were to get the overall dimensions (such as the height and overall body proportions) and the design of the head correct. We had the advantage that the hands themselves were relatively fixed due to technological constraints, which meant the rest of the system had to conform to the hands.

On the topic of the robots’ dimensions, we built a VR environment where Sanctuary employees could stand in a room with simulated robots of different sizes and shapes and virtually morphed these to find what people liked. This was a fascinating exercise. One thing we found was that on average men felt threatened by robots that were taller than they were, but women did not. It was clear that the overall dimensions, including height, shoulder-to-waist ratios, and limb lengths, were extremely important design choices. We ended up choosing the height and proportions of Phoenix to walk the line between looking competent and ready for work, but not crossing the line to intimidating or threatening.

For the design of Phoenix, we collaborated with the Swedish industrial design firm MERPHI. MERPHI’s co-founder and CTO, Mehrdad H.M. Farimani, felt that for the design to be endearing, the robot should look imperfect. This is a fascinating premise that we initially did not buy into, but over time it has come to look like a very important idea.

“People are fallible and everybody has flaws. For us to relate to a machine and create a relationship and attachment to it, we also need that thing to have flaws … for design, it is an opportunity to create a relationship with people. Therefore, in the design of Phoenix, we tried to apply ‘intentional imperfection.’ And I believe that is one factor that makes the look of Phoenix attractive to people.”

Getting to a Billion General-Purpose Robots

We see a future where the descendants of Phoenix do much of the world’s work. People will never run out of things that we want done. Having exceptionally skilled, plentiful and inexpensive labor available will mean that the types of things we can accomplish as a civilization will accelerate. From the mundane to the cosmic (think space exploration), being able to print generally intelligent labor transforms civilization in a way that no previous technology has done. The promise here naturally leads to billions of these systems, and a new type of machine that we will always want more of.

Making this future real is not just about how well the technology functions. In order for people to want this future, it is absolutely critical that people feel safe and trust being around our technology.

Farimani describes the challenge of designing robots that people trust and want to work with:

“In the design of a robot, there is a fine line between people liking it and feeling threatened by it… Usually, when I have the first contact with the clients, they talk a lot about how they want their project to end up like an Apple product or a Tesla product, so this is a red flag for me and it is a sign that the client is not visionary enough. But the Sanctuary team had its own unique vision.”

Sanctuary AI is pioneering human-like intelligence in general-purpose robots. This also makes us influential industry trailblazers of humanoid design. We are creating a solution to work that will impact generations to come. Phoenix is so much more than a piece of technology or a product. Our robots and their Carbon-based minds are going to change the world and become ingrained in the infrastructure of society and the economy. The magnitude of this is felt in the design of Phoenix as well. It is being designed to stay relevant through time.

“All in all, I think this is what differentiates Phoenix from other robots. We did not design a fashion that is contextual, but a skin that can adapt to different fashions … Phoenix is designed to be timeless. It does not follow design trends or even retro trends. It has its own unique look, the same as the vision of Sanctuary AI.”

This post was written with interview quotes from Mehrdad H.M. Farimani, Co-Founder & CTO of MERPHI Design. The information he provided was on behalf of the entire MERPHI team, who have all directly and indirectly supported the collaborative project. Those team members also include Filip Sperr, Philip Berlin, Amanda Cederhom, Minnie Barnaby, and Melina Kazemi.

"How to Create a GPR" Part 2: A Sense of Touch

As mentioned in the first post of this series, human-like senses are one of three critical components needed when creating humanoid general-purpose robots.

Sanctuary AI is focused on making our technology as capable and versatile as possible. We have state of the art equipment that brings sight, hearing, and speech capabilities to our pilots and to Carbon, our AI control system. Extending our own sense of sight, hearing, and speech is something with which most of us are very familiar. We are already used to using technology to break down physical barriers and extend our senses across the globe through home security systems, video calls, social media live feeds, and video games on a daily basis. Those concepts are no longer extraordinary, but what is novel is the idea of adding the sense of touch.

The sense of touch is hugely important. The human hand has orders of magnitude more sensory (afferent) neurons coming from tactile receptors in the hand to the brain than it does motor (efferent) neurons coming from the brain to the muscles. It turns out, the sense of touch is critical for dexterous manipulation. For example, have you ever wondered how you are able to find objects in your pocket or in the dark, or how clumsy your hands are when they are numb from the cold? The sense of touch is how.

For our pilots, we use HaptX glove technology to send them sensory input from the hands of the robots as they interact with objects in both the physical and virtual world. To capture touch data from the hands on Phoenix™ robots, we use technology from Tangible Research, a company Sanctuary AI recently acquired. Tangible is best known for its industry-leading haptic technology for robotic hands and is a key part of our ecosystem.

Nearly all work is delivered through hands–in fact, 98.7% of all work requires fine manipulation. Most companies developing humanoid robots focus primarily on gross motor skills, like walking. However, Sanctuary AI has taken a unique approach by first tackling the more difficult challenges, like creating dexterous human-like hands. Our dedication to creating the world’s best robotic hands is not something we take lightly: in this video we posted, you can see just a small snapshot of the calibration and testing process for one of our hands right before it gets installed on Phoenix. Our “hands first” hardware approach creates a direct path to being able to assist with the widest variety of tasks, including (but not limited to) retail work, medical assessments, cleaning, warehouse activities, hospitality/food preparation, maintenance with hand tools, lab tasks, agriculture-related labor, and customer service, and is one of the leading reasons why we have been able to deploy technology to customer sites.

Tangible Research fits neatly into our strategy by providing Sanctuary AI robots with touch sensors that can transmit the same kind of information to the AI control system that a person's fingers transmit to their brain. As our CEO, Geordie Rose, said: “We acquired Tangible Research to help us implement the best possible haptic technology into our systems. You may have seen Tangible technology already, as there is a widely shared video of Jeff Bezos piloting a system Tangible developed. Sanctuary AI robots can see, hear, feel, and to a certain extent, understand the world. But this is not enough. They also need to be able to use their hands just like we do…Robots without human-like hands simply can’t do much. In some real sense, from the perspective of doing real work, the rest of the robot is only there to get the hands to do the work that needs doing.”

To learn more about other companies in the Sanctuary AI technology stack, please read “Building an Ecosystem Designed to Build a Human-Level AI”.

"How to Create a GPR" Part 1: 5 Reasons Why We Started with Hands

If you want to build a humanoid robot that is truly general-purpose, you can’t do it without dexterous human-like hands. Hands are the key to work, whether we’re talking about tasks in workplace settings or the domestic activities we do in our day-to-day lives, like retrieving the mail, turning on lights, preparing food, and cleaning.

We take for granted the complexity and incredible capabilities of our hands. They are the primary tools we use to act upon our thoughts and engage with the real world. Approximately one-quarter of all our bones are located in our hands–one hand alone has 27 bones. Translating the anatomical marvel of our hands into an equally capable robotic version may seem overwhelmingly challenging, but it's absolutely necessary to create a humanoid robot with true general-purpose use. So let’s go “hands-on” and discuss the top five reasons why gross motor skills alone simply aren’t enough:

1. Fine manipulation is required for 98.7% of all work

According to the U.S. Bureau of Labor Statistics, “fine manipulation is defined as picking, pinching, touching, or otherwise working primarily with fingers rather than the whole hand or arm.” That type of fine manipulation is required for nearly 99% of all work and spreads across virtually all industries. If people need fine manipulation to perform 99% of all work, then humanoid general-purpose robots also need fine manipulation to assist with 99% of all work. If they can’t use their fingers, then their potential to contribute to the workforce is limited. With birth rates declining, populations aging, and labor shortages worsening, there is a dire need for technology to fill gaps in the workforce–gaps that can only be filled by humanoid general-purpose robots with human-like hands.

2. The significance of hands, tools, and early technology

Before we had heavy machinery, tools, and computers, all we had were rocks and sticks. Our ability to utilize tools has always set us apart from other species and propelled our advancement as a civilization. Our dexterous, capable hands allowed us to pick up rocks to bash things, sharpen sticks into spears, and create rock flakes for weapons–these were our first technologies. Hands have always been what we use to interface with the world and utilize tools to create even better tools that advance civilization. Naturally, the tools we have made are designed for our hands and, as a result, in order for someone–or something–to effectively use them, they also need human-like hands.

But it doesn’t just stop at tools. Think about all the handles, buttons, knobs, switches, levers, dials, touchscreens, trackpads, joysticks, scroll wheels, keyboards, trackballs, and computer mice that make up every control panel or interface to a piece of equipment, machinery, or technology that we interact with as people in the world. The size of the investment we have made as a civilization into architecting the world to be an interface to human-like hands is almost immeasurable. As such, having human-like hands on a robot that is truly general-purpose is a gigantic advantage.

3. A worker’s value is not primarily in their legs

There are millions of jobs that don’t depend on bipedal mobility, or the ability to walk upright on two legs like a person. According to the United States CDC, 11.1% of the population has a mobility disability, which is classified as having serious difficulty walking or climbing stairs. There are many wheelchair, scooter, cane, and walker users actively participating in the workforce and bringing tremendous benefit to the economy because having value as a worker is not primarily defined by legs.

4. If legs were the key to work, bipedal robots would already be everywhere

Bipedal robots that can walk on two legs have been around for several decades. Yet, we still aren’t seeing these types of robots assisting with work in the real world in any meaningful numbers. Legs alone just aren’t the key capability needed, not to mention the walking ability of many bipedal robots is still quite limited/clumsy, making it so the legs are not yet the force multiplier they one day could be. I wonder if the focus on legs is a consequence of our tendency as people to work on a problem from “the ground up.” Given that the legs on a bipedal humanoid robot are the nearest thing to the ground, this logic would have us address that problem first. Sure, they’re a part of a complete humanoid robot, but they are not the most crucial element needed to unlock work.

5. Hands are the key to work; our successful commercial deployments prove this theory to be true

Sanctuary AI general-purpose robots feature state-of-the-art robotic hands: in fact, we believe they are the best robotic hands available. It’s because of our incredible hands that Sanctuary AI has already been able to successfully deploy robots to customer sites to do work. Sanctuary AI robots can perform hundreds of tasks, some of which are accomplished through gross motor movement, but most are performed with the highly dexterous hands on the robots.

Hands are what provide Sanctuary AI robots adaptability in work settings. Environments can change, objects can move locations or even change sizes, but hands can adjust to dynamic variables in order to get the job done.

While having dexterous human-like hands is a necessity, they are not the only element that is needed to create humanoid general-purpose robots that can do work. Human-like intelligence is also something that is an absolute necessity, among other things. We’ll have more to share in future blog posts and videos on this and other topics.

How to Create a General-Purpose Robot: A New Blog Series

In the early days of Sanctuary AI, we were carving out an entirely new industry that the general public only took slight interest in. This year that has changed. There has been a huge shift in interest towards both AI and robotics. The need to explain why humanoid general-purpose robots (GPRs) are necessary has lessened: many more people are aware of the labor challenges organizations are facing and the unsettling implications worker shortages have on sustaining our way of life. But on the flip side, the need to explain what humanoid general-purpose robots are and how they are made has grown.

We love that people are interested in our work. And as we’ve stated publicly many times before, we want to help everyone to work more safely, efficiently, and sustainably. As a company that is at the forefront of the humanoid general-purpose robot industry, we have a unique opportunity–and what we feel is an obligation–to help educate people on general-purpose robot technology and what we have learned through our work. That is why we are releasing a new blog series titled “How to Create a General-Purpose Robot.”

“How to Create a General-Purpose Robot”: Series Explained

Over the next few weeks, we will be publishing a series of blog posts that explain our methodology for creating humanoid general-purpose robots. The series will cover what we see as the three essential components of creating a humanoid GPR: human-like intelligence, human-like form and function, and human-like senses. Although some of these parts are more challenging to create than others, all of them are of equal importance and have an interconnected relationship.

While there are companies that have successfully created components of the triangle, like human-like legs and senses, no one has created human-like intelligence yet and very few companies are focusing on all three of these components to the same extent as we are. Here at Sanctuary AI, we are mindful not to fall into the special-purpose trap that would have us fail to accomplish our mission. Special-purpose technologies have a limited work scope; general-purpose technologies have broad, general use. You can see the impressive breadth of tasks our 5th-generation humanoid general-purpose robots can complete in this video entitled “60 Tasks, 60 Seconds.”

Building an Ecosystem Designed to Build a Human-Level AI

Around 2009, when I was at D-Wave, I met and pitched investment in the company to Peter Thiel. At some point he asked me a question along the lines of “tell me something that’s true that very few people agree with you on.” Apparently he asks this of everyone.

I remember this because the answer I gave had nothing to do with D-Wave or quantum computation. My answer was that human-level AI will be built by 2030. I’d just spent an hour describing the most advanced quantum computation project on Earth. You’d think I would be able to answer with something related to that… but no. I’d become obsessed with what I had started thinking about as The Big Problem — how to understand our own minds well enough to build one.

Over the 14 years since then my colleagues and I have been obsessively grinding away at The Big Problem, first at D-Wave, then at Kindred, and now at Sanctuary. Every year we have methodically been taking two steps forward for every one step back. While as I age I get less sure of basically everything, I still (somewhat hesitantly) think my exuberance in 2009 about when we solve The Big Problem was justified. I still think we will get there by 2030, even after working on the front lines of this problem for more than a decade and understanding in a visceral way how hard it is. Although I don’t know if today I could give Thiel the same answer as I did back then. A lot more people agree with me now than back in 2009.

It Takes a Village

I was born and spent my first few years in Tanzania with the Swahili-speaking Masai. My first spoken word was apparently dawa, which means medicine. Perhaps related, I also almost got eaten by army ants at least once. Good times.

I remember some Swahili but not much. One of the phrases I remember is Asiye funzwa na mamaye hufunzwa na ulimwengu, which roughly means “it takes a village to raise a child”. I don’t know if this is actually true for people. But it sure is true for technology. Behind the computers, networks, and phones we take for granted are thousands of people, all contributing to the creation and maintenance of these things. We have a tendency to idolize individuals, like Steve Jobs and Elon Musk, and there is no doubt those two are unique. But the reality is that it really does take a village to create advanced technologies.

When I was at Kindred, one of my investors was Pierre Lamond. Pierre is legendary in the venture capital community. In 1967 he became CEO of National Semiconductor, and then a partner at Sequoia from 1981 through 2009. He would tell fascinating stories of the early days of Sequoia’s investment strategies in Apple and Atari. Back then most of the parts of the systems Apple and Atari were selling had few suppliers. Things like memory, motherboards, processors, keyboards, and screens were all new and both companies struggled to consistently find good parts and suppliers for their products. So what Sequoia did is fund the entire home computing ecosystem. They backed dozens of companies that were building the unsexy parts that Apple and Atari needed to thrive.

This strategy was amazingly effective. A flywheel began to turn where Apple and Atari got top notch parts, making their products great, which meant more people bought them, which caused capital to flow into the entire Sequoia ecosystem. This changed how computers got adopted into our homes. Make no mistake, this could have gone very differently. If Sequoia had not pumped capital into all those companies you probably couldn’t name, Apple and Atari — and by extension the entire computing industry — would have had unreliable and expensive products very few people would have put up with.

Pierre’s stories about the early days of the computing industry resonated with me. We faced the same kinds of challenges at D-Wave. The parts required to build a quantum computer either didn’t exist, or they were not good enough. I had spent a good part of my life there investing in things like dilution refrigerators and superconducting chip fabrication. Looking back I wish I’d met Pierre ten years earlier. As an aside, Pierre was chairman of Cypress Semiconductor for many years, the place where we built D-Wave’s fabrication capability — but we invested there years after he’d already left.

When we started Sanctuary back in 2018, the Sequoia strategy was very much on my mind. We had set ourselves the mission to be the first organization in the world to create human-level AI. We committed to doing so by thinking of that AI as the control system for a humanoid robot. This vision of what we wanted to build entangled two different grand challenges. Not only did we need to build a new kind of robot that could move with the grace and fluidity of a person, but we also needed to build a software control system for it that had most of the properties of the human mind.

So how to proceed? As soon as the ink was dry on the incorporation papers, we started trying to figure out what the ecosystem for building a human-level intelligence in a humanoid robot should look like. What was our ‘Sequoia strategy’? What parts does something like this need to have? Who are the organizations that we should bet on to provide them? What should we commit to building ourselves? When things got confusing, we tried to build analogies between what we wanted to build and the very early days of the computing industry.

The Start of an Ecosystem for Building Human-Level AI

Complex technologies are built by coalitions of organizations working together. When new categories arise, such as home computers, quantum computers, or human-level AI, the ecosystem around them needs to be actively built by strategic investment. People who see something new and important coming have to capitalize and support organizations that could build currently non-economic parts of the new thing. Market forces don’t work in this case because the product doesn’t exist yet, and therefore demand for its parts doesn’t exist yet, and therefore the suppliers of those parts don’t have an economic incentive to build them.

We’ve drawn on our experiences and the lessons learned from the early days of the semiconductor industry to start building an ecosystem to support delivering against Sanctuary’s mission. Here we’d like to introduce some of this ecosystem. Each of these companies is working on critical parts of one or both of the two grand challenges we must overcome to reach mission success.

To start, some context on our approach to building human-level AI. We have committed to a set of principles that include thinking of building human-level AI as being the same as building a control system for a humanoid robot. In the 1980s and 90s, brilliant work was done on what robotic control systems of this type would need to look like. When we started seriously thinking about taking on this challenge, one of the first things we did was go back and read what people like John McCarthy, Nils Nilsson, Marvin Minsky, Rodney Brooks, and Allen Newell thought needed doing. It’s unfortunate that the newer generation of AI researchers is largely unaware of this work. Like neural nets themselves, good ideas can simply arrive too early.

One of the key features that makes controlling robots in the real world distinct from many other uses of AI is that, at least if you believe the above cast of characters, there is a requirement for these control systems to perceive, act on, and think about the world symbolically. That is, whatever it is that their minds are doing, they are doing it with symbols. The human mind uses a specific set of these symbols, and those are the ones robots with human-like minds should use.

Because of this, one of our first choices for an ecosystem partner was Cycorp. Cycorp is the longest-running AI organization in the world. Their Cyc technology traces its roots back to 1984. Cycorp’s leader, Doug Lenat, is a legend in the field of artificial intelligence. He has steadfastly championed symbolic reasoning and logic as a path to human-level AI. You can learn more about him and Cycorp on Lex Fridman’s podcast. The Cycorp team under Doug’s leadership has built what we believe is one of the most important parts of this puzzle: the part that applies logic and reasoning to the symbols a mind uses to understand the world.

Cycorp’s people have thoughtfully engineered tens of millions of logical rules intended to model what we think of as common sense. Cyc reasons using common sense both deeply and quickly, using over a thousand specialized reasoners which attack each problem as a team.

What is common sense, exactly? What we mean by this needs to be written down somewhere. This is excruciatingly difficult and, I think, outside the scope of statistical approaches such as Large Language Models (LLMs). I don’t think scaling LLMs will produce common sense.

One of the things Doug said that stuck with me is that Cyc encodes all the things that never get written down that we all take for granted. We are betting that Cyc and LLMs working together will be much, much greater than the sum of their parts. And since Cyc operates by logical pro- and con- argumentation, unlike LLMs, it can fully explain every step in its reasoning. This is important for any AI to be trustworthy, and especially important when AI controls machines. Doing this right matters a great deal to us.

Sanctuary and Cycorp have worked closely together for several years. We have deeply integrated Cyc into Sanctuary’s technology stack. Our business relationship includes an equity position and an exclusive license to Cyc for humanoids.

We have seen remarkable success and validation of our intuition on Cyc’s central role in delivering human-like AI. Here’s Doug on the partnership: “Cycorp’s mission is to deliver on the founding promise of the field of AI — the creation of technologies that model and understand how the world works, as deeply and broadly as people do, enabling them to responsibly, sanely and ethically solve problems like we do. I am thrilled to partner with Sanctuary to push towards mission success, and together build the most important technology in history.” Amen to that!

Apptronik is one of the few organizations in the world that could realistically produce robots that can move with the function, mobility, grace, and fluidity of the human body. When we were looking for suppliers of physical robots and their parts, we looked closely at all the usual suspects. Apptronik was superior to all of them in ambition, technological capability, and alignment with our mission. Our first joint project back in 2019 had this over-the-top description of what success would look like: “A robot like nobody has ever seen. Organic curves, delicate generative structure, clean and deliberate lines, delicate yet nimble motions. A living triumph of modern artwork and precision motion control.” You can see some of the progress they are making on building embodiments for us.

We have now worked together with Apptronik for more than three years, and have invested in their success and mission, with an equity position and a board seat. From Jeff Cardenas, CEO: “Apptronik is a world leader in building advanced bipedal walking robotics systems designed to work with and alongside people. Sanctuary is a key investor and supporter of our company from the early days. We are very excited to have the opportunity to work with their team and CEO, Geordie Rose, who has been providing us with his expertise as a member of our board of directors. Apptronik’s mission is to build the world’s best humanoid robots, and working together with Sanctuary, we have dramatically accelerated progress towards both our and their mission success.”

Robots require models of the world personal to their minds. These models are what they use to think about what to do, before they do it. Many of the technologies we use to build world models, such as Blender, DeepMind’s MuJoCo physics engine, and Epic’s Unreal Engine 5, don’t require our support and investment, but some of our requirements do. One of the organizations in our ecosystem working on aspects of building world models is Common Sense Machines, or CSM. CSM is building state-of-the-art systems for producing 3D assets for world models. We have invested in CSM over others in the space because they are very aligned with us on what is required to build human-level AI and how to get there.

Max Kleiman-Weiner, co-founder and CEO: “Learning generative world models is a critical component in successfully creating artificial general intelligence. It’s comparable to how a child learns about the world through experience. We give AI that ability. The way our technology is being used by Sanctuary is aligned with the original and greatest goal of the field of artificial intelligence — building machines that think like we do. We are very excited about being part of what Sanctuary is building.”

One of the key elements of our approach is learning from demonstration, where the demonstration examples are provided through a human-in-the-loop control paradigm called analogous teleoperation. This control style outfits a person in what we call a pilot rig, which transmits the sense data from the robot to the person and converts that person’s actions into actions the robot performs.

Contoro is an Austin-based robotics company that manufactures high-precision robotic systems originally designed for injury rehabilitation. We saw the possibility of using them instead as part of our pilot rigs. Youngmok Yun, CEO, Contoro: “We believe our technology will unlock the unlimited potential of teleoperation – the ability to remotely control robots from anywhere in the world. Contoro and Sanctuary AI have the same desire to solve labor problems by combining robotics and human-like intelligence.”

Another important part of the teleoperation system is the haptic gloves, which both transmit the sense of touch to the pilot and allow the pilot to manipulate the world with great precision. The hands on Sanctuary robots are the most advanced robotic hands ever built, in the sense of mimicking the human hand. We use HaptX gloves to pilot them, and have built a very healthy partnership with HaptX for this use case. Jake Rubin, founder, chairman and CEO, HaptX: “The gloves we’ve created at HaptX feature hundreds of microfluidic actuators, so when pilots interact with virtual objects, they feel completely real. Our partnership with Sanctuary will help push the boundaries of teleoperation capabilities for a true-to-life embodiment experience.”

Relatedly, the touch sensors on the hands of Sanctuary robots would ideally transmit the same kind of information to the AI mind that our own fingers do to our own minds. We acquired Tangible Research to help us implement the best possible haptic technology into our systems. You may have seen Tangible technology already, as there is a widely shared video of Jeff Bezos piloting a system Tangible developed. Jeremy Fishel, founder and CTO: “We believe one of the reasons robotic dexterity has been so far behind human ability is because the sense of touch is quite difficult to implement. Integrating our tactile sensors into the phenomenal hand hardware Sanctuary has created, will help the robotic hand capabilities increase exponentially and become more human-like.”

Sanctuary robots can see, hear, feel, and to a certain extent, understand the world. But this is not enough. They also need to be able to use their hands just like we do.

Work is delivered by hands. We use them all the time for nearly everything we do. More than 98% of all work requires fine manipulation. This is a key reason that the labor market is so much bigger than the current robotics market. Robots without human-like hands simply can’t do much. In some real sense, from the perspective of doing real work, the rest of the robot is only there to get the hands to the work that needs doing.

The human hand is a marvel. Robot hands that mimic human hands are very hard both to build and to control. To help with this, we acquired the entire intellectual property portfolio of Giant AI. The team at Giant pioneered a set of techniques for using vision to help robots grasp and manipulate objects the way we do. The acquisition of these assets strengthened our already strong and rapidly growing intellectual property position. Being first mover in this new category gives us the strategic advantage of owning many foundational technologies that are necessary for anyone to make this type of technology work in commercial settings.

While building humanoid robots is more straightforward than building their minds, there are still areas that require long-term investment. One of these is actuator technology. We have developed a relationship with Exonetik to investigate a new approach to building actuators that might shine in the humanoid context. Pascal Larose, co-founder and CEO: “Exonetik is developing a novel type of actuator for Sanctuary’s use case that enables breakthroughs in safe, efficient, powerful, and graceful human-like movement. The use of our actuators in Sanctuary general-purpose robots will be huge for both organizations, and for all Sanctuary’s customers.”

At the intersection of the mind and the body lies the need for powerful, reliable networks. A fleet of robots will have many aspects of their minds in other places, running on computer networks that could be thousands of miles away. To ensure we have the best possible solutions to networking issues, Bell and Verizon have partnered with us.

John Watson, Group President, Business Markets, Customer Experience and AI at Bell, and Sanctuary board member: “Bell Ventures was founded to drive innovation and support Canadian entrepreneurs. Investing in Sanctuary AI clearly aligns with those objectives. We envision a future where Sanctuary technology can be used to help address labour shortages. The Bell 5G network will not only power the Sanctuary AI labor as a service model across Canada, but will also provide edge computing capabilities and security to other Canadian organizations utilizing their technology.”

Michelle McCarthy, Managing Director, Verizon Ventures: “At Verizon Ventures, we strive to be at the intersection of connectivity and technology. Our mission is to support startups that have developed the most cutting-edge solutions with the power to shape the future leveraging advanced network capabilities such as Verizon’s 5G Ultra Wideband network and Mobile Edge Compute. Seeing the Sanctuary AI technology deployed in a commercial setting was a major milestone not only for the company but for this emerging industry.”

Being able to build a small number of concept robots is necessary as a first step toward mission success. But ultimate success requires building complex machines at scale. Humanoid robots are roughly the same complexity to build as cars. Magna is one of the few companies in the world with the experience of producing these kinds of systems at scale, and has invested in Sanctuary. Josh Berg, Managing Director, Magna Technology Investments: “As a leading mobility technology company, Magna has extensive experience in developing and manufacturing complex systems. Factory of the future concepts such as humanoid robots certainly falls into this category and we see great potential for this technology in automotive manufacturing. Our investment in Sanctuary was a strategic move to help facilitate advancements in this area. We are excited to explore the future of this technology together.”

As the Sanctuary fleet grows, one use case is to set these robots the goal of doing work. Nils John Nilsson, one of the AI pioneers who have profoundly influenced our approach, put it this way:

“I claim that achieving real human-level artificial intelligence would necessarily imply that most of the tasks that humans perform for pay could be automated… Machines exhibiting true human-level intelligence should be able to do many of the things humans are able to do. Among these activities are the tasks or “jobs” at which people are employed. I suggest we replace the Turing test by something I will call the “employment test.” To pass the employment test, AI programs must be able to perform the jobs ordinarily performed by humans. Progress toward human-level AI could then be measured by the fraction of these jobs that can be acceptably performed by machines.”
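
To make the measurement in that last sentence concrete, here is a minimal sketch of how an employment-test score could be computed. It is only an illustration: the `JobResult` record, the `employment_test_score` function, and the sample jobs are all hypothetical, and the data is made up rather than taken from any Sanctuary system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JobResult:
    """One evaluated job and whether a machine performed it acceptably.

    Hypothetical record type, introduced only for this illustration.
    """
    job: str
    acceptable: bool


def employment_test_score(results: list[JobResult]) -> float:
    """Return the fraction of evaluated jobs performed acceptably."""
    if not results:
        raise ValueError("need at least one evaluated job")
    passed = sum(1 for r in results if r.acceptable)
    return passed / len(results)


# Made-up sample data, not measurements of any real robot.
sample = [
    JobResult("stock shelves", True),
    JobResult("fold clothing", True),
    JobResult("file taxes", False),
    JobResult("repair a bicycle", False),
]

score = employment_test_score(sample)
print(f"{score:.2f}")  # prints 0.50
```

The hard part in practice is not the arithmetic but deciding which jobs go on the list and what counts as acceptable performance, which this sketch deliberately leaves open.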

Workday Ventures joined our investor group. It is the strategic capital arm of Workday, the enterprise cloud applications provider that uses AI and ML to elevate human capabilities and make finance and HR more intelligent. Barbry McGann, managing director and senior vice president, Workday Ventures: “At Workday, we are shaping the new world of work, by helping customers adapt and thrive in a changing world. More than 50% of the Fortune 500 rely on Workday to intelligently manage their talent and their financial processes. We are very excited to work with Sanctuary to help address the labor and skills challenges facing many organizations today.”

Early use cases for humanoid robots that require general intelligence will be important to get right. One of the organizations partnering to investigate a diverse set of use cases is Canadian Tire Corporation (CTC). We recently announced our first deployment at a Mark’s retail store in Langley, BC, Canada. The week-long pilot at the CTC-owned store successfully tested the general-purpose robot in a ‘real-life’ store environment with 110 retail-related tasks completed correctly, including front and back-of-store activities. Cari Covent, VP, Data, Analytics and AI, Canadian Tire Corporation: “Working with Sanctuary AI has enabled Canadian Tire Corporation to further explore cutting-edge innovations and accelerate operational efficiency. With the Mark’s pilot, we were able to focus human resources on higher-value and more meaningful work, like customer service and engagement. By making strategic investments and working with partners like Sanctuary AI, we are furthering our customer understanding, customer experience, and operational efficiencies to drive our Better Connected strategy.”

The Road Ahead

Investing in and cultivating an ecosystem isn’t in itself enough to ensure delivering on Sanctuary’s mission. Understanding our own minds well enough to build one is one of the most difficult challenges people have ever taken on. But we think strategic investment in the right kind of ecosystem is necessary for success.

To date we haven’t talked much publicly about what we are doing, and how we are doing it. This isn’t because we wanted to keep anything secret. We just wanted to focus on building the thing.

Now that we have gotten the technology to a point where many of the key challenges are understood, we are going to spend more time talking about what we are doing and where technologies related to human-level AI really are. We will provide regular updates on progress towards our objective that will be accessible to everyone. This technology will affect every person on the planet. It’s important for people to understand what it is and isn’t, when it’s likely to arrive, and what that will look like.